Becoming a superhero...

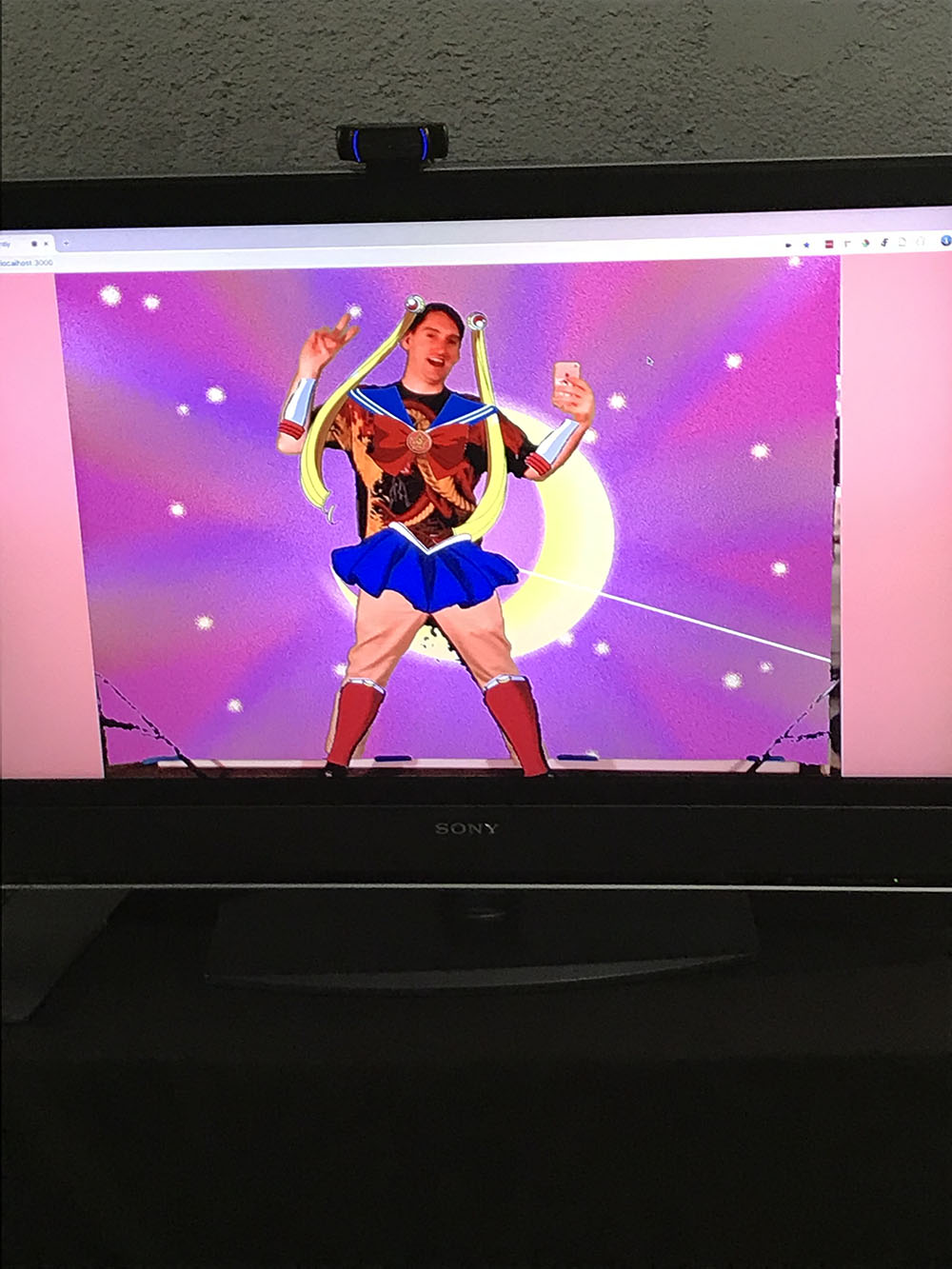

I wanted to create an experience to help people feel strong, powerful, and confident. For this installation, I used machine learning to let participants experience the transformation sequence of the iconic anime superhero Sailor Moon.

My Heart, My Body

Using technology to turn people into superheroes

Background

An immersive, interactive installation created for Gray Area in San Francisco

… through a spectacular transformation

When called on to protect her friends, Sailor Moon changes from a normal student into a superhero via a spectacular transformation sequence. To replicate this, I wanted participants to gradually become Sailor Moon by striking three different poses. With each pose, they would see themselves wearing more of Sailor Moon’s classic costume.

Process

Expanding my skills with machine learning and JavaScript libraries

p5.js / PoseNet / TensorFlow.js

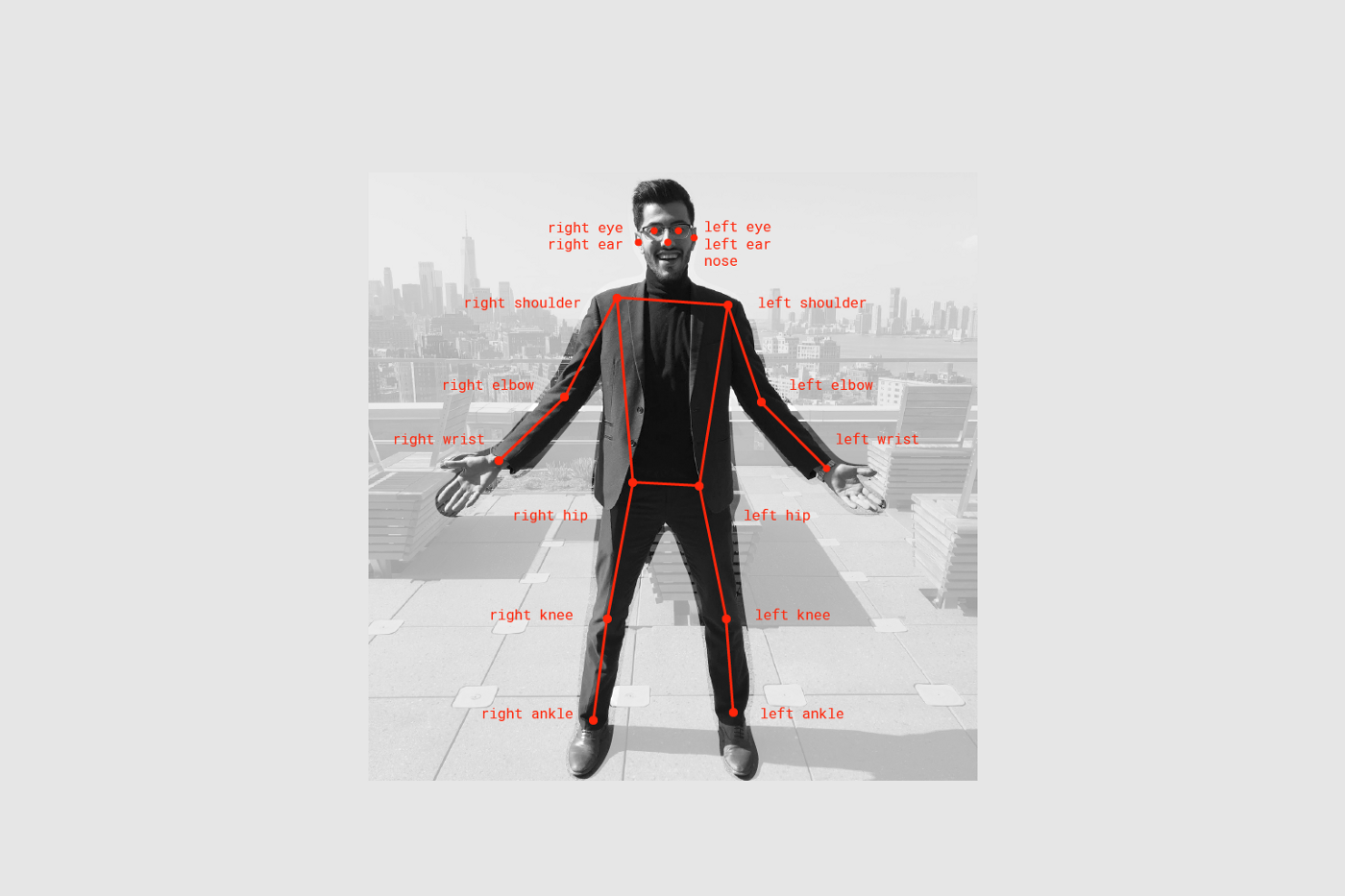

I built the web app’s main framework in p5.js, which provides a canvas for the various elements to live in. To detect when participants struck a certain pose, I used PoseNet, a JavaScript library that runs real-time pose estimation in the browser. When PoseNet’s machine learning model detects a person, it returns a set of data points (keypoints) on their face and body. I used these data points to read participants’ positions and place each piece of clothing in the appropriate spot.
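As a rough illustration of reading a data point and placing an image on it, here is a minimal sketch. It assumes the keypoint shape that the TensorFlow.js PoseNet package returns (`part`, `score`, `position`). The sample values and the helper names `findKeypoint` and `anchorFor` are hypothetical and are not taken from the installation's code.

```javascript
// Hypothetical sample in the shape PoseNet returns for a single pose:
// each keypoint has a part name, a confidence score, and an x/y position.
const samplePose = {
  score: 0.92,
  keypoints: [
    { part: "leftWrist", score: 0.88, position: { x: 120, y: 340 } },
    { part: "leftElbow", score: 0.91, position: { x: 150, y: 260 } },
    { part: "nose", score: 0.2, position: { x: 200, y: 80 } },
  ],
};

// Return the position of a named keypoint, or null when the keypoint
// is missing or its confidence is below minScore (assumed threshold).
function findKeypoint(pose, part, minScore = 0.5) {
  const kp = pose.keypoints.find((k) => k.part === part);
  return kp && kp.score >= minScore ? kp.position : null;
}

// Compute the top-left corner needed to draw an image of the given
// size so that it is centered on a keypoint.
function anchorFor(point, width, height) {
  return { x: point.x - width / 2, y: point.y - height / 2 };
}

const wrist = findKeypoint(samplePose, "leftWrist");
const gloveCorner = wrist ? anchorFor(wrist, 40, 60) : null;
```

Skipping low-confidence keypoints is one way to keep clothing from jumping around when a body part is out of frame. The threshold of 0.5 is an arbitrary starting value.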

Using a single data point, I could make relatively accurate placements. But I wanted the superhero costume to move along with participants, which would require rotation and scaling.

The distance between points

To achieve this, I implemented scaling based on the distance between two points: for example, the size of the gloves was calculated based on the distance between a participant’s wrist point and elbow point.
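The scaling idea can be sketched as a small pure function. This is an illustration under assumptions: the `ratio` and `aspect` parameters and the function names are hypothetical, chosen only to show how a drawn size could follow the wrist-to-elbow distance.

```javascript
// Euclidean distance between two points of the form { x, y }.
function distance(a, b) {
  return Math.hypot(b.x - a.x, b.y - a.y);
}

// Derive a glove size from the forearm length.
// ratio:  glove height as a multiple of the wrist-to-elbow distance (assumed value)
// aspect: width / height of the glove artwork (assumed value)
function gloveSize(wrist, elbow, ratio = 1.2, aspect = 0.5) {
  const height = distance(wrist, elbow) * ratio;
  return { width: height * aspect, height };
}

// As a participant moves closer to the camera, the two points move
// further apart in the image and the glove grows with them.
const far = gloveSize({ x: 100, y: 100 }, { x: 100, y: 150 });
const near = gloveSize({ x: 100, y: 100 }, { x: 100, y: 200 });
```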

I used a similar process to determine rotation. By calculating the angle of the line between two points, I could determine the correct rotation: the hair buns, for example, were rotated based on the angle between participants’ eye points.
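A minimal sketch of the rotation step, assuming points of the form `{ x, y }`. The helper names are hypothetical. `drawRotated` assumes a p5.js instance (`p`) and an already loaded image, and uses only standard p5.js drawing calls.

```javascript
// Angle in radians of the line through two eye points.
// The points are ordered left to right in the image first, so a level
// head gives 0 regardless of which eye is passed first or whether the
// camera feed is mirrored.
function headTilt(eyeA, eyeB) {
  const [a, b] = eyeA.x <= eyeB.x ? [eyeA, eyeB] : [eyeB, eyeA];
  return Math.atan2(b.y - a.y, b.x - a.x);
}

// Point halfway between two points, useful as a rotation pivot.
function midpoint(a, b) {
  return { x: (a.x + b.x) / 2, y: (a.y + b.y) / 2 };
}

// Draw an image centered on `center`, rotated by `angle` radians.
// p is a p5.js instance; img is an image loaded elsewhere.
function drawRotated(p, img, center, angle, width, height) {
  p.push();
  p.translate(center.x, center.y); // move the origin to the pivot
  p.rotate(angle);                 // p5.js rotates in radians by default
  p.imageMode(p.CENTER);
  p.image(img, 0, 0, width, height);
  p.pop();
}
```

`Math.atan2` is used rather than a plain arctangent of the slope because it handles vertical lines and keeps the sign of the tilt.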

I wanted participants to control their own transformation experience by striking a series of poses. To do that, I needed PoseNet to recognize specific poses on people with a wide range of body types.

A diverse data set

I worked with friends to make a diverse data set of poses, which I captured as a large JSON set and fed back into PoseNet. Then, I set up conditional functions so the web app would look for one pose after another and ignore poses that had already been completed.
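The one-pose-after-another logic can be sketched as a small state machine. This is an assumption about structure, not the installation's actual code: `createPoseSequence` is a hypothetical helper, and the `label` field stands in for whatever pose recognition result the real app produces.

```javascript
// Track progress through an ordered list of pose checks.
// Each step is a function that receives the current detection and
// returns true when its pose is matched.
function createPoseSequence(steps) {
  let index = 0; // index of the pose currently being looked for
  return {
    get stage() { return index; },
    get done() { return index >= steps.length; },
    // Only the current step is tested, so earlier poses cannot
    // trigger again and later poses cannot trigger early.
    update(detection) {
      if (index < steps.length && steps[index](detection)) {
        index += 1;
      }
      return index;
    },
  };
}

// Hypothetical detections: a `label` naming the recognized pose.
const transformation = createPoseSequence([
  (d) => d.label === "pose1",
  (d) => d.label === "pose2",
  (d) => d.label === "pose3",
]);

transformation.update({ label: "pose2" }); // ignored: pose1 comes first
transformation.update({ label: "pose1" }); // advances to the second pose
transformation.update({ label: "pose1" }); // ignored: already completed
```

The `stage` value could then decide how many costume pieces to draw on each frame.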

Conclusion